Michael Good

Digital Equipment Corporation

Nashua, New Hampshire, USA

Reprinted with permission from Proceedings of the Human Factors Society 29th Annual Meeting (Baltimore, September 29-October 3, 1985), Santa Monica, CA, Vol. 1, pp. 571-574. Copyright © 1985 by the Human Factors and Ergonomics Society. All rights reserved.

Abstract

Iterative design has been strongly recommended as part of a basic design philosophy for building measurably easy-to-use computer software. Iterative design was a major technique for meeting the specified usability goals for Eve, a new text editor for the VAX/VMS operating system. There was no adverse effect on the project schedule. Users’ problems followed patterns similar to those encountered in earlier laboratory experiments on operating systems.

Introduction

Iterative design of computer software has been strongly recommended as part of a basic design philosophy for building measurably easy-to-use computer software (Gould and Lewis, 1985). Several objections have been raised to this idea. For example, some designers and managers fear that iterative design will lengthen development schedules and jeopardize prompt delivery of software. Others fear that iterative design is a purely ad hoc approach that treats symptoms rather than addressing fundamental problems. Many of these objections stem from a lack of experience with iterative design in developing production software systems.

Iterative design was used successfully in developing Eve, a new text editor for the VAX/VMS operating system built using the VAX Text Processing Utility (VAXTPU).¹ Our experience in this real-world design problem showed that iterative design can be used to help meet measurable goals for ease of use without any detrimental effect on the project schedule.

Most of the suggestions for iterative refinement came early in the product development process. The suggestions reflected several different categories of interface problems which were observed earlier in laboratory experiments.

Project History

VAXTPU provides a programming language that can be used to build text editors and other applications programs which involve text manipulation. The Eve user interface was programmed entirely in the VAXTPU language, and was developed in parallel with the rest of VAXTPU. This separation between the editing “engine” and the user interface was a key component in minimizing the effect of iterative design on the entire project schedule.

The initial version of Eve was delivered on schedule for an internal version of VAXTPU released in February, 1984. Iterative testing of this first version was limited to laboratory tests of ease of learning with seven novice users. These tests resulted in minor changes to the basic Eve keypad commands.

More extensive iterative design began in February, when Digital employees started using the Eve interface for their daily text editing. The first users of the early versions were colleagues in our research group. By the middle of April, another version was ready for more widespread distribution throughout Digital. Interested users obtained the editor via Digital’s DECnet network, testing Eve and VAXTPU in Digital facilities throughout the world.

Throughout the development process, we collected suggestions based on users’ experiences with Eve and used them to improve the editor. At the same time, a complete version of Eve was available at all times after the initial version was produced, in case the schedule was shortened for an earlier product release. Field test for VAXTPU began in August, 1984 and ended in February, 1985. Field test for the updated version of the VAX/VMS operating system began in May, 1985 and ended one month later.

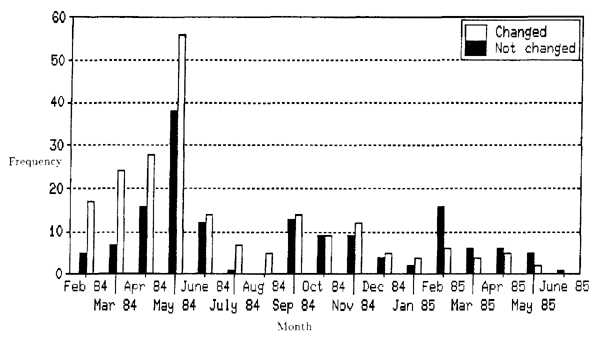

Figure 1 shows how the frequency of Eve suggestions changed during the development process. The “Changed” lines indicate how many suggestions were eventually incorporated into Eve. The “Not Changed” lines indicate how many of each month’s suggestions were not used. Changes usually affected the Eve user interface code, with some changes affecting the on-line help or the printed documentation.

Figure 1: Eve Suggestions by Month


Most of the suggestions were made during the six months before field test began, and these earlier suggestions were incorporated more frequently than were the later suggestions. The greatest number of suggestions came in May, 1984, the month after Eve was first made available to a wide audience. Most of the suggestions made during the last five months, after the completion of the initial VAXTPU field test, were not included in Eve.

Table 1 shows the differences between changes made before and during field test. Overall, 362 specific suggestions for improving Eve were received from 75 different users. Most of these suggestions, including those made during field test, came from Digital employees.

| | Changed | Not Changed | Total |
|---|---|---|---|
| Before Field Test | 146 | 79 | 225 |
| During Field Test | 66 | 71 | 137 |
| Total | 212 | 150 | 362 |

Table 1: Suggestions Before and During Field Test

In general, the earlier a suggestion was made, the more likely it was to be incorporated into the interface. Out of the 362 total suggestions, 225 (or 62%) were made before field test began. Eventually 65% of these early suggestions were incorporated into the Eve interface, compared to 48% of the suggestions made during field test.
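The percentages in the preceding paragraph follow directly from the counts in Table 1. A minimal Python sketch that recomputes them, assuming rounding to the nearest whole percent (the paper does not state its rounding rule):

```python
# Counts from Table 1: (changed, not changed) for each period.
table1 = {
    "before_field_test": (146, 79),
    "during_field_test": (66, 71),
}

def pct(part: int, whole: int) -> int:
    """Percentage rounded to the nearest whole number (rounding rule assumed)."""
    return round(100 * part / whole)

# All suggestions, and those made before field test began.
total = sum(changed + not_changed for changed, not_changed in table1.values())
before = sum(table1["before_field_test"])

# Share of all suggestions that arrived before field test.
share_before = pct(before, total)

# Fraction of each period's suggestions that were eventually incorporated.
adoption = {
    period: pct(changed, changed + not_changed)
    for period, (changed, not_changed) in table1.items()
}

print(total, share_before, adoption)
```

Running it reproduces the 362 total, the 62% early share, and the 65% versus 48% incorporation rates reported above.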

Eve had met its specified usability goals by September 1984, when it was measured on Roberts’ text editing benchmark tasks for core learning and core use (Roberts, 1979). The core learning benchmark measures ease of learning by novice computer users. The core use benchmark measures speed of use by experienced users of the text editor.

Eve’s score was 4.9 ± .16 minutes/task on the core learning benchmark, and 26 ± .07 seconds/task on the core use benchmark. When compared to data reported by Roberts and Moran (1983), Eve ranked second out of ten editors on the ease of learning benchmark and tied for third out of ten editors on the ease of use benchmark, though most of the differences between editors on these benchmarks are not statistically significant. Eve’s scores on these benchmark tasks were not exceeded by any editor running on a standard 24 × 80 character-cell display.

Categories of Interface Problems

Whiteside, Jones, Levy, and Wixon (1985) described five categories of interface problems that they observed while running laboratory experiments with 165 users on 11 different operating systems, including command, menu, and iconic interfaces. These problems (usefulness of help systems, system feedback, naturalness of input forms, consistency of input forms, and navigation through the system) appeared in each of the three styles of interface.

During Eve’s iterative design, each of the 362 suggestions received was placed into one of these five categories, or into two new categories: one for missing functionality, and another for system failure. Table 2 shows the percentage of Eve suggestions present in each category. The most frequent categories were usefulness of help systems and system feedback, each accounting for 22 to 23% of the suggestions.

| Category | Frequency | Percent |
|---|---|---|
| Usefulness of help systems | 82 | 23 |
| System feedback | 81 | 22 |
| Naturalness of input forms | 74 | 20 |
| Consistency of input forms | 64 | 18 |
| Missing functionality | 34 | 9 |
| Navigation through the system | 19 | 5 |
| System failure | 8 | 2 |
| Total | 362 | 100 |

Table 2: Problems Reported During Iterative Design
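The Percent column of Table 2 can be checked against the Frequency column. A minimal Python sketch, assuming each category's share is rounded to the nearest whole percent (the rounding rule is an assumption, not stated in the paper):

```python
# Suggestion counts per problem category, from Table 2.
categories = {
    "Usefulness of help systems": 82,
    "System feedback": 81,
    "Naturalness of input forms": 74,
    "Consistency of input forms": 64,
    "Missing functionality": 34,
    "Navigation through the system": 19,
    "System failure": 8,
}

total = sum(categories.values())

# Each category's share of all suggestions, rounded to a whole percent.
percent = {name: round(100 * n / total) for name, n in categories.items()}

for name, p in percent.items():
    print(f"{name}: {categories[name]} ({p}%)")
print("Total:", total, "sum of rounded percents:", sum(percent.values()))
```

The rounded values match the table, but they sum to 99 rather than 100; this is an ordinary rounding effect, and the table's Total row of 100% refers to the full set of 362 suggestions.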

What are some examples of suggestions in each of these categories? Usefulness of help systems included suggestions for improving the wording of particular help or error messages that users found to be confusing. System feedback included several suggestions for changing where the current editing position was placed on the screen after commands were completed. Naturalness of input forms included suggestions for faster movement to the beginning and end of lines. Consistency of input forms included a problem in which the Eve help command did not accept the same command abbreviations that were accepted in the rest of the editor. Missing functionality included requests for new features. Navigation through the system included a problem in which users who were exploring certain system status areas could not return to their original document. System failure included reports of infinite loops and access violations which stopped the editor from running.

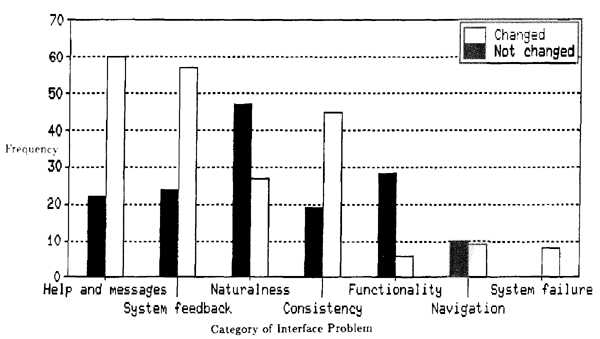

Figure 2 shows what proportion of suggestions in each category were incorporated into Eve. Most of the suggestions in the categories of usefulness of help systems, system feedback, consistency of input forms, and system failure were incorporated into Eve. The less frequent implementation of suggestions in the categories of naturalness of input forms, missing functionality, and navigation through the system reflects that many of these suggestions would require adding new commands or new features to the editor. These types of suggestions were tempered by data on actual text editor usage (Good, 1985) and by a design goal of avoiding unnecessary complexity in the editor interface.

Figure 2: Eve Suggestions by Category of Interface Problem


Conclusion

Iterative design was used successfully in the design of the user interface of a new text editor for the VAX/VMS operating system. Iterative design was one of several tools used to ensure that the new interface met its specified, measurable goals for ease of learning and speed of use, without any adverse effect on the product schedule. Most of the problems reported by editor users in field conditions during the iterative design process fell into the same categories as problems reported in laboratory experiments on operating system interfaces. These results are encouraging for developing both improved products and improved understanding of problems in user-computer interaction.

Footnote

¹ VAX, VMS, and DECnet are trademarks of Digital Equipment Corporation.

Acknowledgments

Steve Long, Sharon Burlingame, and Terrell Mitchell designed and implemented the VAX Text Processing Utility. Joan Watson wrote the User’s Guide to Eve, and Tonie Franz wrote the VAXTPU Reference Manual. Paula Levy ran the ease of learning benchmark tests.

References

Good, M. (1985). The use of logging data in the design of a new text editor. In Proc. CHI ’85 Human Factors in Computing Systems (pp. 93-97). New York: ACM.

Gould, J. D. and Lewis, C. (1985). Designing for usability: key principles and what designers think. Communications of the ACM, 28, 300-311.

Roberts, T. L. (1979). Evaluation of computer text editors (Report SSL-79-9). Xerox Palo Alto Research Center: Systems Sciences Laboratory. Doctoral dissertation, Stanford University.

Roberts, T. L. and Moran, T. P. (1983). The evaluation of text editors: methodology and empirical results. Communications of the ACM, 26, 265-283.

Whiteside, J., Jones, S., Levy, P. S. and Wixon, D. (1985). User performance with command, menu, and iconic interfaces. In Proc. CHI ’85 Human Factors in Computing Systems (pp. 185-191). New York: ACM.