Dennis Wixon, John Whiteside, Michael Good, and Sandra Jones

Digital Equipment Corporation

Maynard, Massachusetts, USA

Originally published in Proceedings of CHI ’83 Human Factors in Computing Systems (Boston, December 12-15, 1983), ACM, New York, pp. 24-27. Included here with permission. Copyright © 1983 by ACM, Inc.

Abstract

A measurably easy-to-use interface has been built using a novel technique. Novices attempted an electronic mail task using a command-line interface containing no help, no menus, no documentation, and no instruction. A hidden operator intercepted commands when necessary, creating the illusion of a true interactive session. The software was repeatedly revised to recognize users’ new commands; in essence, the users defined the interface. This procedure was used on 67 subjects. The first version of the software could recognize only 7% of all the subjects’ spontaneously generated commands; the final version could recognize 76% of those commands. This experience contradicts the idea that people are not good at designing their own command languages. Through careful observation and analysis of user behavior, a mail interface unusable by novices evolved into one that let novices do useful work within minutes.

In this research project we attempted to build an interface that would meet a stringent test for ease of use. The interface was to allow a novice user to perform useful work during the first hour of use. However, the interface was to contain no help, no menus, no documentation, and no instruction. The interface was to be so natural that a novice would not have to be trained to use it.

Traditional design methods do not lead to this type of easy-to-use interface. Therefore we had to create a new software interface design method. The method we chose is based on two principles:

- An interface should be built based on the behavior of actual users.

- An interface should be evolved iteratively based on continued testing.

To carry out this method, we created a situation where users were given an electronic mail task which they were to perform on a computer. Since the users received no instruction on how to perform this task on the computer, they were forced to generate their own command language syntax and semantics. User commands which were not accepted by the software alerted a hidden operator who translated them into recognized system commands. This procedure gave users the illusion of dealing only with a computer while allowing them to issue commands spontaneously.

The computer system kept a complete log of each session. We made changes to the user interface based on analysis of these logs. In this way the software was continuously tested and modified; the effectiveness of our changes was measured by subsequent users’ reactions to the new software. Thus, user input was the driving force behind the design of the software, creating a user-defined interface (UDI).
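The hidden-operator procedure and its session log can be sketched as a small Python loop. This is a minimal sketch under stated assumptions, not the software used in the study: `Session`, `LogEntry`, `parse`, `operator`, and `toy_parser` are hypothetical names, with `parse` standing in for the UDI parser and `operator` for the hidden operator's manual translation. The point it illustrates is that every exchange is logged together with who handled it, which is what makes later log analysis possible.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class LogEntry:
    user_input: str       # what the user typed
    system_command: str   # the command actually executed
    handled_by: str       # "parser" or "operator"


@dataclass
class Session:
    # Hypothetical stand-in for the UDI parser: returns a recognized
    # system command, or None if the input is not understood.
    parse: Callable[[str], Optional[str]]
    # Hypothetical stand-in for the hidden operator's manual translation.
    operator: Callable[[str], str]
    log: List[LogEntry] = field(default_factory=list)

    def submit(self, user_input: str) -> str:
        command = self.parse(user_input)
        handled_by = "parser"
        if command is None:
            # Unrecognized input is routed to the hidden operator; the user
            # sees the same kind of response either way.
            command = self.operator(user_input)
            handled_by = "operator"
        # Every exchange is logged for later analysis and parser revision.
        self.log.append(LogEntry(user_input, command, handled_by))
        return command


# Toy parser that understands only "read <number>".
def toy_parser(text: str) -> Optional[str]:
    words = text.lower().split()
    if len(words) == 2 and words[0] == "read" and words[1].isdigit():
        return f"READ {words[1]}"
    return None


session = Session(parse=toy_parser, operator=lambda text: "TIME")
session.submit("read 3")            # handled by the parser
session.submit("what time is it?")  # handled by the "operator"
```

Revising the interface then amounts to inspecting the `operator` entries in the log and extending the parser so that similar inputs are handled automatically next time.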

This method is based on work by Chapanis (1982) and his associates at Johns Hopkins. They applied this type of technique to the design of checkbook- and calendar-management programs. Gould, Conti, and Hovanyecz (1981) have used a hidden-operator scenario to simulate a listening typewriter.

Not counting pilot subjects, whom we ran to develop the procedure, we ran 67 subjects between April and October 1982. During this time, the software was being regularly updated to recognize command forms used by previous subjects. The subjects were novice computer users recruited through posters placed in colleges and commercial establishments. Most of the subjects had less than one year’s experience with computers; none had ever used electronic mail. Figure 1 shows the final version of the experimental task.

Welcome. For the next hour or so you will be working with an experimental system designed to process computerized mail. This system is different than other systems since it has been specifically designed to accommodate to a wide variety of users. You should feel free to experiment with the system and to give it commands which seem natural and logical to use.

Today we want you to use the system to accomplish the following tasks:

- have the computer tell you the time.

- get rid of any memo which is about morale.

- look at the contents of each of the memos from Dingee.

- one of the Dingee memos you’ve looked at contains information about the women’s support group, get rid of that message.

- on the attached page there is a handwritten memo, using the computer, have Dennis Wixon get a copy.

- see that Mike Good gets a copy of the message about the keyboard study from Crowling.

- check the mail from John Whiteside and see that Burrows gets the one that describes the transfer command.

- it turns out that the Dingee memo you got rid of is needed after all, so go back and get it.

Figure 1: Task Used in the UDI Experiment

Our most important ease-of-use metric was the percentage of user commands that the software recognized correctly, on its own, without human intervention. We called this percentage the “hit rate.” Over the course of the experiment the software grew in complexity, with the changes inspired by the behavior of previous subjects. Did the addition of these changes improve the hit rate?
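As defined above, the hit rate is a simple proportion over a session log. A minimal Python sketch, assuming each logged command is tagged with whether the parser or the hidden operator handled it (the `hit_rate` helper and the tags are illustrative, not from the paper):

```python
def hit_rate(handled_by):
    """Percentage of commands the parser handled with no operator help."""
    if not handled_by:
        raise ValueError("no commands logged")
    hits = sum(1 for who in handled_by if who == "parser")
    return 100.0 * hits / len(handled_by)


# Hypothetical session: four commands, three parsed automatically.
session = ["parser", "operator", "parser", "parser"]
print(hit_rate(session))  # 75.0
```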

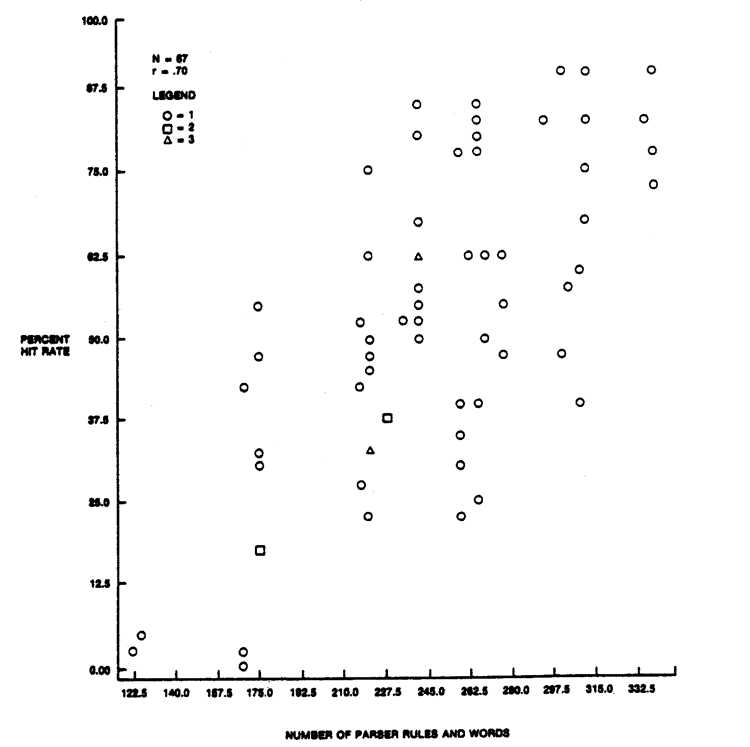

Figure 2 shows the relation between the hit rate and the number of rules and words (synonyms) in the UDI parser. The number of rules and words is a straightforward measure of parser size and complexity. The figure is a scatterplot showing where each subject fell in the space defined by the two variables. Notice the strong positive correlation shown in the plot: the more complex the parser, the higher the hit rate. The correlation coefficient between these two variables was .70. Since the proportion of variance accounted for is the square of the correlation coefficient (.70² = .49), 49% of the total variability in hit rate can be accounted for by the state of the parser.

Figure 2: Relation Between Hit Rate and Number of Parser Rules
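The 49% figure reported with Figure 2 follows from squaring the correlation coefficient of .70. A minimal Python sketch: `pearson_r` is an illustrative helper, not from the paper, computing the sample Pearson correlation. The per-subject data behind Figure 2 are not reproduced here, so the helper is only exercised on synthetic values in the tests.

```python
import math


def pearson_r(xs, ys):
    """Sample Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)


# Proportion of variance accounted for is r squared.
r = 0.70
print(round(r ** 2, 2))  # 0.49
```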

The lowest hit rate we observed was 0% (no commands at all recognized) with an early version of the parser. The highest was 90%, which we achieved near the end of testing. The mean hit rate for our first version was 3%, as opposed to 80% for the last version. The scatterplot shows that as the parser grew, the interface got better, as defined by hit rate. Therefore, the method of adding parser rules based on user behavior seems to work. What sort of rules were added and how effective were they individually?

Table 1 presents brief descriptions of the various changes that were made during the experiment. In Table 1, we also attempt to show the relative importance of each change. We show for each change the percentage of commands which required that change in order to be parsed. These data are based on the set of 1070 spontaneous commands generated by all the subjects during the course of the study.

| Change # | Brief Description | % of commands that require this rule\* |
|---|---|---|
| 0 | Version 1 (starting point) | 7 |
| 1 | Include 3 most frequently used synonyms for all items | 33 |
| 2 | Specify msg by any header field (title, name, etc.) | 26 |
| 3 | Robust message specification: “[memo] [#] number” | 20 |
| 4 | Allow overspecification of header fields | 16 |
| 5 | Allow word “memos” | 15 |
| 6 | Allow word “memo” | 11 |
| 7 | Allow “from,” “about,” etc. | 9 |
| 8 | Prompting and context to distinguish send and forward | 9 |
| 9 | Allow “send [message] to address”; 1st or last names | 7 |
| 10 | Add 4th and 5th most used synonyms | 5 |
| 11 | Allow special sequences (e.g. current, new, all) | 4 |
| 12 | List = read if only 1 message | 3 |
| 13 | Spelling correction | 3 |
| 14 | Fix arrow and backspace keys | 2 |
| 15 | Allow period at end of line | 2 |
| 16 | Allow “send address message” | 2 |
| 17 | 70 character line allows entry to editor | 1 |
| 18 | Include all synonyms for all items | 1 |
| 19 | Allow phrase “copy of…” | 1 |
| 20 | Parse l as 1 where appropriate | 1 |
| 21 | Allow possessives in address field | 1 |
| 22 | “Send the following to…” allows entry to editor | 1 |
| 23 | “From: name” and “To: name” enter the editor | 1 |
| 24 | Insert spaces between letters and numbers (e.g. memo3) | .5 |
| 25 | Re: allow a colon to follow “re” | .5 |
| 26 | Allow word “contents” | < .5 |
| 27 | Allow “and” to be used like a comma | < .5 |
| 28 | Allow characters near return key at end of line | < .5 |
| 29 | Allow “send to [address] [message]” | < .5 |
| 30 | Mr., Mrs., Ms. in address | < .5 |

\*NOTE: Percentages exceed 100 because many commands required more than one change to parse.

Total parsable commands using all changes above = 76%

Total unparsable commands = 24%

Total commands = 100%

Table 1: Effect of Software Changes
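The percentages in Table 1 are counted per change over all commands, so a command that needed several changes is counted once under each of them; this is why the column sums to more than 100. A minimal Python sketch of that tally, assuming each command has been annotated with the set of change numbers it required (the `change_percentages` helper and the sample are hypothetical, not data from the study):

```python
from collections import Counter


def change_percentages(required_changes):
    """For each change number, the percentage of all commands that needed it.

    required_changes holds one set per command, listing the changes that
    command needed in order to parse (an empty set means the starting
    parser already handled it).
    """
    total = len(required_changes)
    counts = Counter(c for needed in required_changes for c in needed)
    return {change: 100.0 * n / total for change, n in sorted(counts.items())}


# Hypothetical four-command sample, not data from the study.
sample = [{1}, {1, 2}, {2, 3}, set()]
pct = change_percentages(sample)
print(pct)                # {1: 50.0, 2: 50.0, 3: 25.0}
print(sum(pct.values()))  # 125.0
```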

Of the 1070 total spontaneous commands, only 78 (or 7%) could be handled by the features contained in our starting (Version 1) parser, which accepted only a limited syntax. The changes that were added during the experiment are numbered 1 through 30. The percentage shown for each change is the percentage of commands for which that change was necessary (but not necessarily sufficient) for parsing. The final version of the software could correctly interpret 816 (or 76%) of the 1070 commands.
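The counts above can be checked against the rounded percentages in Table 1 with a few lines of Python; the ratio of final to starting coverage (816/78) comes out at roughly ten, i.e. about an order of magnitude. The `pct` helper is illustrative only.

```python
TOTAL = 1070  # spontaneous commands in the study


def pct(n, total=TOTAL):
    return 100 * n / total


print(f"Version 1: {pct(78):.1f}%")            # Version 1: 7.3%
print(f"Final:     {pct(816):.1f}%")           # Final:     76.3%
print(f"Unparsed:  {pct(TOTAL - 816):.1f}%")   # Unparsed:  23.7%
print(f"Ratio:     {816 / 78:.1f}x")           # Ratio:     10.5x
```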

The changes listed in Table 1 do not have a one-to-one correspondence to the changes made in the parser, nor does the ordering of Table 1 reflect the order in which changes were made. The software was revised by constantly making small changes. Each change in Table 1 usually reflects a number of actual software changes implemented during the course of the experiment.
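Several of the lower-frequency changes in Table 1 (15, 20, 24, 25, and 30) are surface-level and could plausibly be handled by rewriting the input before parsing. The paper does not describe how they were implemented, so the following Python sketch is hypothetical: `normalize` is an illustrative name, and "where appropriate" in Change 20 is approximated here as "after the words memo, message, or msg".

```python
import re


def normalize(line: str) -> str:
    """Hypothetical pre-parse cleanup approximating a few Table 1 changes."""
    text = line.strip()
    # Change 30: drop Mr., Mrs., Ms. before names.
    text = re.sub(r"\b(?:Mr|Mrs|Ms)\.\s*", "", text)
    # Change 15: allow a period at the end of the line.
    text = text.rstrip(".")
    # Change 24: split letters from a following number ("memo3" -> "memo 3").
    text = re.sub(r"([A-Za-z])(\d)", r"\1 \2", text)
    # Change 20: read "l" as "1" after a message word ("memo l" -> "memo 1").
    text = re.sub(r"\b(memo|message|msg)\s+l\b", r"\1 1", text, flags=re.IGNORECASE)
    # Change 25: allow a colon after "re" ("re: morale" -> "re morale").
    text = re.sub(r"\b(re):", r"\1", text, flags=re.IGNORECASE)
    return text


print(normalize("read memo3."))                        # read memo 3
print(normalize("forward re: morale to Mrs. Jones."))  # forward re morale to Jones
```

Ordering matters in a sketch like this: titles are removed before the trailing period is stripped, so that an input ending in "Mr." still loses the whole title.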

The contribution of the additional changes was not uniform. Change 1 permitted recognition of the three most widely used synonyms for commands and terms. One third of all commands issued required this change for successful parsing. By contrast, Change 30, allowing users to add “Mr.”, “Mrs.”, or “Ms.” to names, made only a small contribution, being necessary for less than .5% of commands.
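The synonym changes (Change 1: the three most frequent synonyms; Change 10: the 4th and 5th; Change 18: all synonyms) suggest a frequency-ranked vocabulary. A minimal Python sketch, assuming observed word counts are available per canonical command; `build_lexicon`, `coverage`, and the counts are hypothetical and illustrate only the shape of the diminishing returns, not the study's numbers.

```python
from collections import Counter

# Hypothetical counts of the words users typed when they meant DELETE;
# illustrative numbers only, not data from the study.
observed = Counter({"delete": 40, "erase": 25, "remove": 20,
                    "discard": 6, "destroy": 3, "trash": 1})


def build_lexicon(canonical, counts, top_n=None):
    """Map the top_n most frequent words (all words if None) to canonical."""
    return {word: canonical for word, _ in counts.most_common(top_n)}


def coverage(lexicon, counts):
    """Percentage of observed word uses the lexicon recognizes."""
    covered = sum(n for word, n in counts.items() if word in lexicon)
    return 100.0 * covered / sum(counts.values())


for top_n in (3, 5, None):
    lexicon = build_lexicon("DELETE", observed, top_n)
    print(top_n, round(coverage(lexicon, observed), 1))
# 3 89.5
# 5 98.9
# None 100.0
```

Because user vocabulary tends to be dominated by a few words, the first few synonyms buy most of the coverage, and each additional synonym adds less.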

The results of this research are encouraging — applied to a standard command-line mail interface, the UDI procedure gave an order-of-magnitude improvement in ease of initial use. The hit rate on the starting version was so low that, without operator intervention, novices using that software could perform almost no useful work in exchange for a full hour’s effort. By contrast, many novices finished our entire task within an hour using the final version of the mail interface. The UDI procedure demonstrates that data collected from novice subjects, when carefully analyzed and interpreted, can be used to produce an easy-to-use interface.

References

Chapanis, Alphonse. Man/computer research at Johns Hopkins. In Information Technology and Psychology: Prospects for the Future, R. A. Kasschau, R. Lachman, and K. R. Laughery, Eds., Praeger Publishers, New York, 1982. Proc. 3rd Houston Symposium.

Gould, John D., Conti, John and Hovanyecz, Todd. Composing letters with a simulated listening typewriter. Proc. Human Factors Society 25th Annual Meeting, October 1981, pp. 505-508.

Copyright © 1983 by the Association for Computing Machinery, Inc. Permission to make digital or hard copies of part or all of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for components of this work owned by others than ACM must be honored. Abstracting with credit is permitted. To copy otherwise, to republish, to post on servers, or to redistribute to lists, requires prior specific permission and/or a fee. Request permissions from Publications Dept, ACM Inc., fax +1 (212) 869-0481, or permissions@acm.org.

This is a digitized copy derived from an ACM copyrighted work. ACM did not prepare this copy and does not guarantee that it is an accurate copy of the author’s original work.