Michael D. Good, John A. Whiteside, Dennis R. Wixon, and Sandra J. Jones

Digital Equipment Corporation

Nashua, New Hampshire, USA

Originally published in Communications of the ACM, 27 (10), October 1984, 1032-1043. The cover illustration for that issue, by Akio Matsuyoshi, was inspired by this paper. Copyright © 1984 by ACM, Inc.

An extended abstract of this paper appeared in Proceedings of the CHI ’83 Conference on Human Factors in Computing Systems, sponsored by ACM SIGCHI and the Human Factors Society, Boston, December 12-15, 1983, pages 24-27.

Abstract

Many human-computer interfaces are designed with the assumption that the user must adapt to the system, that users must be trained and their behavior altered to fit a given interface. The research presented here proceeds from the alternative assumption: Novice behavior is inherently sensible, and the computer system can be made to adapt to it. Specifically, a measurably easy-to-use interface was built to accommodate the actual behavior of novice users. Novices attempted an electronic mail task using a command-line interface containing no help, no menus, no documentation, and no instruction. A hidden operator intercepted commands when necessary, creating the illusion of an interactive session. The software was repeatedly revised to recognize users’ new commands; in essence, the interface was derived from user behavior. This procedure was used on 67 subjects. The first version of the software could recognize only 7 percent of all the subjects’ spontaneously generated commands; the final version could recognize 76 percent of these commands. This experience contradicts the idea that user input is irrelevant to the design of command languages. Through careful observation and analysis of user behavior, a mail interface unusable by novices evolved into one that let novices do useful work within minutes.

1. Introduction

1.1 Approaches to System Design

There is a basic tension in human-computer interface design. When users have difficulty with a system, there are, generally speaking, two opposing solutions to the problem:

- Adapt the user to the system, or

- adapt the system to the user.

The first approach suggests training the user, providing additional documentation, or generally trying to change the user’s “problematic” behavior in some way. The second approach assumes that the user is behaving sensibly and faults the system. Examples showing this dualism appear in the literature. For instance, Hammer and Rouse have constructed an idealized model for user text-editing performance, based on system capabilities. They observed that actual users deviated from this model and noted the following:

This suggests that these users need training to enhance their understanding of the editor. In fact, such training might be based on imbedding the model presented in this paper into a “training editor” [11, p. 783].

The authors are suggesting intervention to shape the user’s behavior toward what they have defined as an ideal state.

In contrast, Mack put more emphasis on understanding user behavior rather than changing it.

One approach would be to better understand what, if any, expectations new users have about how text-editing procedures work, and then try to use this information in designing editor interfaces [15, p. 1].

Another example of this dualism concerns the potential role of users in system design. Tesler (quoted by Morgan et al.) explained how user suggestions were incorporated in the development of the Apple Lisa interface:

And then we actually implemented two or three of the various ways and tested them on users, and that’s how we made the decisions. Sometimes we found that everybody was wrong. We had a couple of real beauties where the users couldn’t use any of the versions that were given to them and they would immediately say, “Why don’t you just do it this way?” and that was obviously the way to do it. So sometimes we got the ideas from our user tests, and as soon as we heard the idea we all thought, “Why didn’t we think of that?” Then we did it that way [18, p. 104].

This sounds very different from the experience of Black and Moran, who noted the following:

A computer system cannot be designed by simply asking computer-naive people what they think it should be like: they are not good designers [2, p. 10].

We discuss elsewhere [23] the philosophical underpinnings of this dualism and its implications for the study of human-computer interaction.

1.2 Goals

In this research we made the assumption that the behavior of new computer users could serve as the driving force for an interactive interface design. That is, we examined the extreme case of adapting the system to the user. Our goal was to build an interface in which design was based on observation and analysis of user behavior with minimal shaping of that behavior. The interface contained no help, no menus, no documentation, and no instruction. Nonetheless, it was to allow novices to perform useful work during the first hour of use.

We did not try to design a complete software development process. We are not claiming that interfaces should omit on-line help, for instance. We wanted an especially severe test case for building a usable interface.

We borrowed a method for achieving these ends from members of Alphonse Chapanis’ laboratory at Johns Hopkins University [4, 5, 12]. In this method, users attempting to do a task type commands into a computer system. If the system recognizes these commands, it will act upon them. If the commands are not recognized, then a hidden human operator translates the user’s command into something the computer does understand. The computer then responds, giving the user the impression that the command was automatically handled.

This procedure maintains the flow of the interaction and allows users to express their commands in a natural way, without receiving an intimidating series of error messages. User behavior is then analyzed and incorporated into software changes. If this analysis is successful, then the software should be able to handle more input on its own without help from the hidden operator. Ultimately the software interface will be capable of accepting and dealing with a high proportion of novice users’ spontaneous commands. The result is a user-derived interface (UDI).
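The routing described above can be sketched in a few lines. This is only an illustration under stated assumptions, not the original C implementation: the vocabulary `KNOWN_COMMANDS`, the function names `parse` and `handle`, and the log format are all hypothetical. The error text is the one shown in the paper's Example 2.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical toy vocabulary; the real UDI grammar was far richer.
KNOWN_COMMANDS = {"time", "list", "read", "delete", "send", "forward"}


def parse(command: str) -> Optional[str]:
    """Return a canonical command if the first word is known, else None."""
    words = command.lower().split()
    if words and words[0] in KNOWN_COMMANDS:
        return " ".join(words)
    return None


def handle(
    command: str,
    operator: Callable[[str], Optional[str]],
    log: List[Tuple[str, str, Optional[str]]],
) -> str:
    """Route one user command through the parser, falling back to the operator."""
    parsed = parse(command)
    source = "parser"
    if parsed is None:
        # The parser failed, so the hidden operator may retype the command
        # in a form the parser accepts, or decline by returning None.
        translated = operator(command)
        parsed = parse(translated) if translated else None
        source = "operator"
    # Every exchange is logged so that it can be analyzed later.
    log.append((command, source, parsed))
    if parsed is None:
        return "Command too complicated."
    return f"OK: {parsed}"
```

The log records who resolved each command, which is the information needed later to judge how much of the dialogue the software handled without the operator.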

Kelley [12] has used this method to create a calendar management application, whereas Ford [5] has built a checkbook management application. Gould et al. [9] have used a similar methodology to investigate the acceptability of a listening typewriter. We applied this procedure to an electronic mail system.

Before describing the research itself, we deal with four points easily misinterpreted:

- The UDI method uses actual, untutored behavior as the driving force for design. However, this behavior must be analyzed, interpreted, and mapped onto software changes in possibly subtle and nonobvious ways. The theoretical framework we have chosen to explore does assume structure and consistency in user behavior, but it does not assume that the structure is straightforward or obvious. Quite the contrary; it states that individuals are not generally aware of their own mental structures. Behavioral observations can only be a starting point for a design activity. In this study, our software changes were based nearly exclusively on spontaneous behavior by novices. We wanted to see how much the UDI method could accomplish. For actual human interface design, we would advocate that a UDI approach be integrated with deeper theoretical analysis, use of guidelines, and common sense.

- The UDI method does not give rise to a tightly controlled, classic, factorial experiment. In fact, conditions are deliberately altered as the study proceeds, sometimes unsystematically and on the basis of hunches. The goal of such an approach is to develop a holistic understanding of the user’s difficulties in dealing with a system. A deliberate effort is made to trade off precision for scope.

- The success of the procedure is tested every time a new subject is run. The software will perform better on a new iteration only if the new subject exhibits behavior similar to previous subjects and only if the software changes have been appropriately designed and implemented. Thus the method is both empirical and self-testing.

- We believe that iterative testing of users is the single most practical recommendation we have for user interface design. The process of iterative testing should not be confused with the particular methods employed here nor with other specific recommendations. We discuss the generality of iterative testing at the end of the paper.

2. Method

2.1 Subjects

Novice computer users were recruited by placing posters at colleges, universities, and commercial establishments. We wanted subjects who were either professionals or about to be professionals. For the most part, we were successful in recruiting appropriate subjects. None of the 67 subjects run through the UDI procedure had ever used electronic mail. The majority had some college education and had either never used a computer or had used one for less than a year. About half were working in an office environment.

2.2 Equipment

Experiments were run at two different sites: our offices in Maynard, Massachusetts, and at the Digital store in the Manchester, New Hampshire, mall. Different computers were used at these sites. Experiments conducted in Maynard were run on a VAX computer running the VMS operating system. Experiments conducted in Manchester were run on an LSI-11/23 computer mounted in a VT103 terminal. This system ran the RSTS/E operating system.1

Each system used two VT100-family terminals. The keyboard for the subject’s terminal was modified. From past experience, we knew that subjects who had difficulty with the task would often try to use keys that had interesting labels (such as SET-UP). To avoid this problem, we removed several of the keycaps and covered the keyboard with a template. The keyboard arrangement changed during the course of the experiment. The final version removed the nontyping keys from the main keypad and all the numeric keypad keys with the exception of four. These four keys were in the 1, 2, 3, and 5 positions. They were used as arrow keys in the inverted-T arrangement found on Digital’s personal computers.

The UDI software was written in the C programming language [13]. The parsing technique used was one of the oldest available: top-down parsing with backtracking [1]. The main advantage of this technique was that it was easy to implement; its main disadvantage was that it was slow. Some of the trickier parts of interface design were easier for the implementers to address with a simple approach like top-down parsing than they would have been with a more complicated but more efficient technique.

The software was a facade of a mail system. Although it created the illusion of a functioning mail system for the user, no messages were actually delivered. The facade message system represented a sequence of messages as a linked list of C structures. Each structure included the sender, recipients, date, and subject of a message, as well as the VMS file specification where the text of the message was stored. Mail functions were simulated by manipulating these structures and constructing suitable displays. In addition, the software logged the entire experimental session.
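A linked list of message records of this kind might look roughly as follows. The sketch is in Python rather than the original C, and the `number` field, the helper names `walk` and `unlink`, and any file names used with it are assumptions made for illustration. Only the sender, recipients, date, subject, and file specification fields come from the description above.

```python
from dataclasses import dataclass
from typing import Iterator, List, Optional


@dataclass
class Message:
    number: int                 # assumed field: the message number shown to the user
    sender: str
    recipients: List[str]
    date: str
    subject: str
    text_file: str              # file specification holding the message text
    next: Optional["Message"] = None   # link to the following message


def walk(head: Optional[Message]) -> Iterator[Message]:
    """Yield each message in list order."""
    node = head
    while node is not None:
        yield node
        node = node.next


def unlink(head: Optional[Message], number: int) -> Optional[Message]:
    """Simulate deletion by unlinking one message; return the new head."""
    if head is None:
        return None
    if head.number == number:
        return head.next
    prev = head
    while prev.next is not None:
        if prev.next.number == number:
            prev.next = prev.next.next
            break
        prev = prev.next
    return head
```

Because a facade never delivers anything, "deleting" a message only has to change what later displays are built from, which is what unlinking a node does here.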

The first version of the interface was based on several existing electronic mail systems to provide a realistic starting point for the iterative process. Section 4.2 includes excerpts from the grammars accepted by the first and final versions of the UDI software.

2.3 Procedure

The experimenter briefly described the research work to the subject, explaining that the purpose of the study was to evaluate the ease of use of the computer system and not the subject’s ability. The subjects were told that the results of the session would be kept confidential and that they could leave at any time. Following this, the subject was asked to sign a statement of informed consent and given a questionnaire containing mostly demographic questions.

Since the subjects were familiar with paper mail, the experimenter used it as an analogy in explaining electronic mail. The subjects were told that they could use electronic mail by issuing commands to the computer system. They were told that commands were usually verbs and that command lines were short, consisting of one or more words that were executed by pressing the return key.

The experimenter gave each subject a copy of the task shown in Figure 1. The subjects were told to accomplish the eight items by using the experimental mail system. We devised this task after studying existing mail systems and secretarial mail procedures. We later compared the command frequencies recorded in the UDI experiment to command frequencies reported for several mail systems [3, 8, 22]. The UDI task appears to be fairly representative of electronic mail usage, but it overrepresents forwarding old messages at the expense of sending new messages. The wording of the task was carefully chosen to avoid suggesting specific commands to novice users. A heavy use of the passive voice resulted.

Welcome. For the next hour or so you will be working with an experimental system designed to process computerized mail. This system is different than other systems since it has been specifically designed to accomodate to a wide variety of users. You should feel free to experiment with the system and to give it commands which seem natural and logical to use.

Today we want you to use the system to accomplish the following tasks:

1) have the computer tell you the time.

2) get rid of any memo which is about morale.

3) look at the contents of each of the memos from Dingee.

4) one of the Dingee memos you've looked at contains information about the women's support group, get rid of that message.

5) on the attached page there is a handwritten memo, using the computer, have Dennis Wixon receive it.

6) see that Mike Good gets the message about the keyboard study from Crowling.

7) check the mail from John Whiteside and see that Burrows gets the one that describes the transfer command.

8) it turns out that the Dingee memo you got rid of is needed after all, so go back and get it.

NOTE: The above is an exact replica of the task given for the UDI experiment.

Figure 1. Task Used in the UDI Experiment

Using paper mail as an analogy, the experimenter reviewed each item with the subject. The subjects were told to accomplish these items by issuing commands that seemed reasonable to them. The subjects were never told what commands the program understood. The items were to be carried out in the order presented on the task.

The experimenter explained how to use the computer terminal’s keyboard, demonstrating the use of the delete key to erase typographical errors and the return key to terminate commands. Midway through the research, a demonstration was added to help the subjects understand how to issue commands. The experimenter had the subject type the word “time,” which gave back the current time. This demonstration showed the subjects how to issue a command and gave them an example of feedback from the system.

The subjects were told that everything they typed was being recorded and that there was a monitor set up in another office from which the experimenter could view what was happening on the subject’s video screen.

The experimenter did not remain in the room during the task but could be reached at a phone number that was left with the subject. The subjects were told to call only if they were completely unable to proceed. When such calls took place, the experimenter tried to direct the subject without revealing any commands.

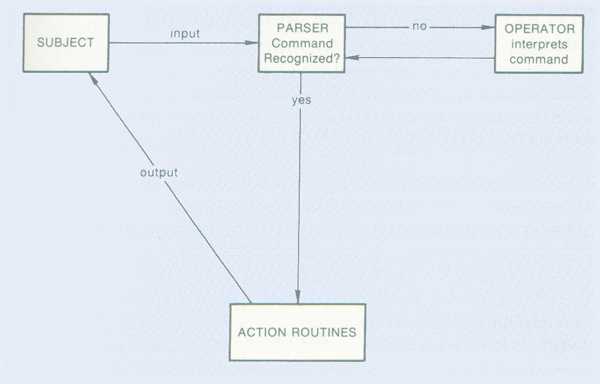

Conducting the experiment required an operator, hidden from the subject, who dealt with the software. The experimental arrangement is shown in Figure 2.

Figure 2. Experimental Arrangement for the UDI Project


The subject attempted to perform the assigned task by typing commands. If the subject’s command was recognized by the parser, the software responded to the subject directly and the hidden operator was not involved. If the commands could not be recognized, the subject did not receive an error message right away. Rather, the hidden operator was notified.

The operator’s task was to make a rapid decision about whether, in his or her opinion, the subject’s command was simple enough to be parsed. If the answer was yes, the operator typed the appropriate command. At this point, the software acted as though the operator’s command had come from the subject.

Alternatively, the operator could decide that the subject’s command could not be automatically parsed. In this case, the operator could either confirm the error message provided by the system and send it to the subject or send a preset error message to the subject.

This arrangement maintained a rapid flow of dialogue between the subject and the system. The operator was provided with an image of the subject’s screen via a video monitor connected to the subject’s terminal. This let the operator anticipate the subject’s command and respond more rapidly, giving the subject the illusion of dealing with a completely automated system.

Following the completion of the task, the experimenter spoke briefly with the subject and administered a second questionnaire, dealing primarily with the subject’s attitudes toward the system. Each subject was paid $25 and given a special coffee mug (“I survived a human factors experiment”).

The logs from each experimental session were carefully analyzed at regular intervals. This analysis provided the basis for software changes that would let the parser recognize command constructions used by the subjects. The ad hoc decisions made by the operator during a session were not the basis for the software changes.

3. Results

3.1 Examples from Logs

To provide the reader with a view of the software from the user’s viewpoint, we have reproduced a portion of two logs below. In these excerpts the subjects are attempting to get rid of any memo that is about morale. Example 1 is from a subject who was working with the earliest version of the parser and illustrates failure to delete a memo:2

MAIL) morale

Stopped understanding at "morale" in message.

MAIL) destroy morale memo

Stopped understanding at "destroy" in message

MAIL) erase morale memo

Stopped understanding at "erase" in message.

MAIL) throw away memo

Stopped understanding at "throw" in message.

MAIL) clear morale memo

Stopped understanding at "clear" in message.

Example 1. Failure to Delete a Memo

The first three commands would have been accepted in the latest version of the parser: Destroy and erase are among the five most frequently used synonyms for the delete command. Example 2 shows a subject trying to do the same portion of the task using the latest version of the parser and shows the successful deletion of a memo:

Mail: morale

Message #6

From: John Whiteside

To: Sandy Jones

Date: 22-Jul-82

Subject: Morale

"At the highest level of self-realization, the distinction between work and life disappears. Your real life doesn't start after five and on weekends. The secret is that work is really an opportunity to play adult games with real money, real resources, and real people. When what you do coincides with what you are, you can have it all."

- Susan Jacoby

John

Mail: delete memo on

Command too complicated.

Mail: delete memo 6

OK - message 6 discarded.

Example 2. Successfully Deleting a Memo

3.2 Software Performance

Our most important metric was the percentage of user commands that the software recognized, on its own, without human intervention. All commands typed by the subjects were placed into one of three categories. Each command had either been accepted or rejected by the parser. If the command had been accepted, then it was classified as being interpreted correctly or incorrectly. Correctly parsed commands formed the basis for our measure of the parser’s effectiveness, the hit rate. The hit rate was derived by dividing the number of correctly parsed commands by the total number of commands issued.
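The hit rate defined above reduces to a simple ratio. The following sketch assumes each logged command has already been labeled with one of three outcome strings; the labels and the function name `hit_rate` are illustrative choices, not taken from the original software.

```python
from collections import Counter
from typing import Iterable

# Assumed labels for the three categories described in the text.
OUTCOMES = {"rejected", "correct", "incorrect"}


def hit_rate(outcomes: Iterable[str]) -> float:
    """Fraction of all issued commands that the parser interpreted correctly."""
    counts = Counter(outcomes)
    unknown = set(counts) - OUTCOMES
    if unknown:
        raise ValueError(f"unknown outcome labels: {sorted(unknown)}")
    total = sum(counts.values())
    # Rejected and incorrectly interpreted commands both count against the parser.
    return counts["correct"] / total if total else 0.0
```

Note that the denominator is every command issued, so a command that the parser accepted but misinterpreted lowers the hit rate just as a rejected command does.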

Over the course of the experiment, the software grew in complexity, with changes inspired by the behavior of previous subjects. Did the addition of these changes improve the hit rate?

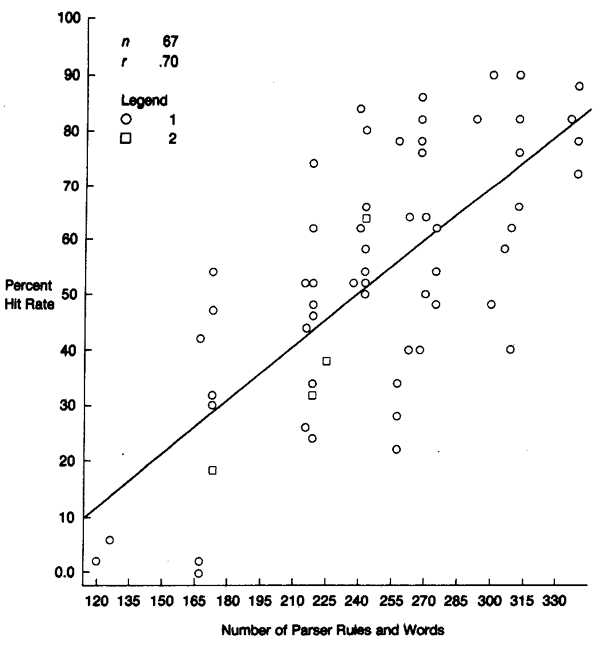

Figure 3 shows the relation between the hit rate and the size of the UDI parser. The size was measured by the number of rules (or productions) in the UDI parser, including synonyms. This is a straightforward measure of parser size and complexity. Figure 3 is a scatterplot showing where each subject fell in the space defined by the two variables, with hit rate graphed on the vertical axis and number of rules graphed on the horizontal axis.

FIGURE 3. Relation Between Hit Rate and Number of Parser Rules


Notice the strong positive correlation shown in the plot; the more complex the parser, the higher the hit rate. The correlation coefficient between these two variables was 0.70. This means that 49 percent of the total variability in hit rate can be accounted for by the size of the parser. Subjects who used the more complex parser were indeed issuing commands that required rules that had been added to the parser based on earlier subject behavior.
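The step from a correlation of 0.70 to 49 percent of variability is the usual squaring of the correlation coefficient. As a rough sketch, the helper below computes a Pearson coefficient from paired observations and squares it; the function names are assumptions, and the per-subject data from the study are not reproduced here.

```python
from math import sqrt
from typing import Sequence


def pearson_r(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def variance_explained(r: float) -> float:
    """Share of variability in one variable accounted for by the other."""
    return r * r


# The coefficient reported in the text.
print(round(variance_explained(0.70), 2))
```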

The lowest hit rate we observed was 0 percent (no commands at all recognized) with an early version of the parser. The highest was 90 percent, achieved near the end of testing. The mean hit rate for the first version was 3 percent, as opposed to 80 percent for the last version. The scatterplot shows that as the parser grew, the interface got better, as defined by hit rate.

The scatterplot also shows differences among subjects who used similar versions of the parser. Throughout the parser’s development, there was a wide range of subject performance at each point. The exceptions to this generalization are the earliest versions of the parser, when performance was uniformly bad, and the last version of the parser, when performance was uniformly good. The graph shows that, as the parser developed, the distribution of successfully parsed commands, as a whole, tended to move upward.

3.3 Effect of Software Changes

Table I presents brief descriptions of the various changes that were made during the experiment. We attempt to show the relative importance of each change by showing the percentage of commands that required that change in order to be parsed. The data are based on the set of commands generated spontaneously by all the subjects throughout the study.

| Change | Brief Description | Percent of Commands that Require this Rule* |
|---|---|---|
| 0 | Version 1 (starting point) | 7.0 |
| 1 | Include 3 most frequently used synonyms for all items | 33.0 |
| 2 | Specify message by any header field (title, name, etc.) | 26.0 |
| 3 | Robust message specification: “[memo] [#] number” | 20.0 |
| 4 | Allow overspecification of header fields | 16.0 |
| 5 | Allow word “memos” | 15.0 |
| 6 | Allow word “memo” | 11.0 |
| 7 | Allow “from,” “about,” etc. | 9.0 |
| 8 | Prompting and context to distinguish send and forward | 9.0 |
| 9 | Allow “send [message] to address”; first or last names | 7.0 |
| 10 | Add fourth and fifth most used synonyms | 5.0 |
| 11 | Allow special sequences (e.g. current, new, all) | 4.0 |
| 12 | List = read if only 1 message | 3.0 |
| 13 | Spelling correction | 3.0 |
| 14 | Fix arrow and backspace keys | 2.0 |
| 15 | Allow period at end of line | 2.0 |
| 16 | Allow “send address message” | 2.0 |
| 17 | 70-character line allows entry to editor | 1.0 |
| 18 | Include all synonyms for all items | 1.0 |
| 19 | Allow phrase “copy of…” | 1.0 |
| 20 | Parse l as 1 where appropriate. | 1.0 |
| 21 | Allow possessives in address field | 1.0 |
| 22 | “Send the following to…” allows entry to editor | 1.0 |
| 23 | “From: name” and “To: name” enter the editor | 1.0 |
| 24 | Insert spaces between letters and numbers (e.g. memo3) | 0.5 |
| 25 | Re: allow a colon to follow “re” | 0.5 |
| 26 | Allow word “contents” | < 0.5 |
| 27 | Allow “and” to be used like a comma | < 0.5 |
| 28 | Allow characters near return key at end of line | < 0.5 |
| 29 | Allow “send to [address] [message]” | < 0.5 |
| 30 | Mr., Mrs., Ms. in address | < 0.5 |
| | Total parsable commands using all changes above | 76 percent |
| | Total unparsable commands | 24 percent |
| | Total commands | 100 percent |

\* Note. Percentages exceed 100 because many commands required more than one change to parse.

Table I. Effect of Software Changes
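Several of the changes in Table I amount to normalizing the input before it reaches the grammar. The sketch below illustrates three of them (changes 1, 15, and 24) as a preprocessing step. It is a guess at one possible shape, not the original implementation: the function name `normalize` is hypothetical, and the synonym set contains only words the paper mentions as synonyms for delete, without claiming which change introduced each one.

```python
import re

# Illustrative synonyms for "delete" mentioned in the paper; the full
# synonym lists used in the study are not reproduced here.
DELETE_SYNONYMS = {"destroy", "erase", "discard"}


def normalize(command: str) -> str:
    """Apply a few Table I style normalizations to one command line."""
    text = command.strip().lower()
    # Change 15: allow a period at the end of the line.
    text = text.rstrip(".")
    # Change 24: insert a space between letters and numbers, e.g. memo3.
    text = re.sub(r"(?<=[a-z])(?=\d)", " ", text)
    words = text.split()
    # Change 1 (in spirit): map a synonym onto the canonical verb.
    if words and words[0] in DELETE_SYNONYMS:
        words[0] = "delete"
    return " ".join(words)
```

Handling such variations in one place keeps the grammar itself smaller, although the paper does not say whether the original software was organized this way.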

To build the set of spontaneous commands, we went over the logs and collected everything that subjects typed into the system. We then excluded three types of input: repeated attempts to perform a single command, commands that parroted the instructions, and responses to system prompts. This left us with a set of 1070 commands generated over the course of the experiment.

Of the 1070 total spontaneous commands, only 78 (or 7 percent) could be handled by the features contained in our starting (version 1) parser. The final version of the parser incorporated 30 changes and could correctly interpret 816 (or 76 percent) of the 1070 commands.

The contribution of the changes was not uniform. The most effective change permitted recognition of the three most widely used synonyms for commands and terms. One-third of all commands issued required this change for successful parsing. By contrast, the least effective change allowed users to add “Mr.,” “Mrs.,” or “Ms.” to names. This change was necessary for less than 0.5 percent of the commands.

There is interdependence between these changes; many commands require a combination of changes to parse. For example, the command “discard memo 5” requires change 1 (including three synonyms) to parse the word “discard,” and change 3 (robust message specification) to parse “memo 5.” Of the 816 parsable commands, 399 (or 49 percent) required more than one change to parse.
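The reported percentages, and the reason the per-change figures in Table I can sum to more than 100, can be checked with a little arithmetic. The counts below come from the text. The small `EXAMPLE` mapping is hypothetical apart from “discard memo 5,” whose required changes are stated above.

```python
from typing import Dict, Set


def percent(part: int, whole: int) -> int:
    """Percentage rounded to the nearest whole number."""
    return round(100 * part / whole)


TOTAL_COMMANDS = 1070      # spontaneous commands collected
PARSED_BY_VERSION_1 = 78   # handled by the starting parser
PARSED_BY_FINAL = 816      # handled by the final parser
NEEDED_SEVERAL = 399       # parsable commands needing more than one change

UNPARSED = TOTAL_COMMANDS - PARSED_BY_FINAL


def change_shares(required: Dict[str, Set[int]]) -> Dict[int, float]:
    """Percent of commands that need each change; a command may need several."""
    counts: Dict[int, int] = {}
    for changes in required.values():
        for change in changes:
            counts[change] = counts.get(change, 0) + 1
    return {c: 100 * n / len(required) for c, n in counts.items()}


EXAMPLE = {
    "discard memo 5": {1, 3},   # from the text: synonym plus robust specification
    "discard memo 2": {1, 3},   # hypothetical
    "discard 5": {1},           # hypothetical
    "delete #3": {3},           # hypothetical
    "delete 5": set(),          # hypothetical: needs no change
}
```

In the toy mapping, changes 1 and 3 are each needed by three of five commands, so their shares add up to 120 percent even though only four of the five commands need any change at all.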

The 254 spontaneous commands that could not be parsed fell into several categories. Many of these commands were incorrectly interpreted; that is, the parser accepted the command but performed an incorrect action. For example, some subjects issued the command “To Dennis Wixon” in order to send a message to Dennis Wixon. The parser interpreted this command as “list the messages sent to Dennis Wixon.” Similarly, the parser interpreted the word “print” as a command to print a hard-copy version of a message, but several subjects intended it to print a message on the screen.

Other syntactic problems included

- verbs that were not the first word in a command (“dingee memo 5 remove”);

- words that were run together (“read dingeememos”);

- commands with extra words, phrases, or punctuation, such as the phrase “from files” in “delete this memo from files.”

3.4 User Attitudes

In addition to software performance, we measured user attitudes toward the system using a semantic differential [19]. A semantic differential is a series of scales anchored by bipolar adjectives. It measures three components of attitudes: evaluation (e.g., “good-bad”), potency (e.g., “large-small”), and activity (e.g., “fast-slow”). The subject is asked to rate a particular word or concept on a 7-point scale (-3 to 3) for each adjective. For instance, a subject might rate the term “dinosaur” as being extremely large, quite slow, and neutral with reference to being good or bad. The specific questionnaire used in this study was taken from Good [7].
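Scoring such a questionnaire comes down to averaging the ratings that belong to each component. The sketch below uses only the three example scales named above and assumes that positive values lean toward the first adjective of each pair and that “extremely” and “quite” correspond to 3 and 2. The actual questionnaire items and sign conventions are not given here, so these are labeled assumptions.

```python
from statistics import mean
from typing import Dict

# Assumed mapping from scale to attitude component, using the examples in the text.
SCALES = {
    "good-bad": "evaluation",
    "large-small": "potency",
    "fast-slow": "activity",
}


def component_scores(ratings: Dict[str, int]) -> Dict[str, float]:
    """Average the -3..3 ratings belonging to each attitude component."""
    grouped: Dict[str, list] = {}
    for scale, rating in ratings.items():
        if not -3 <= rating <= 3:
            raise ValueError(f"rating out of range for {scale}: {rating}")
        grouped.setdefault(SCALES[scale], []).append(rating)
    return {component: mean(values) for component, values in grouped.items()}


# The dinosaur example: extremely large, quite slow, neutral on good-bad.
dinosaur = component_scores({"good-bad": 0, "large-small": 3, "fast-slow": -2})
```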

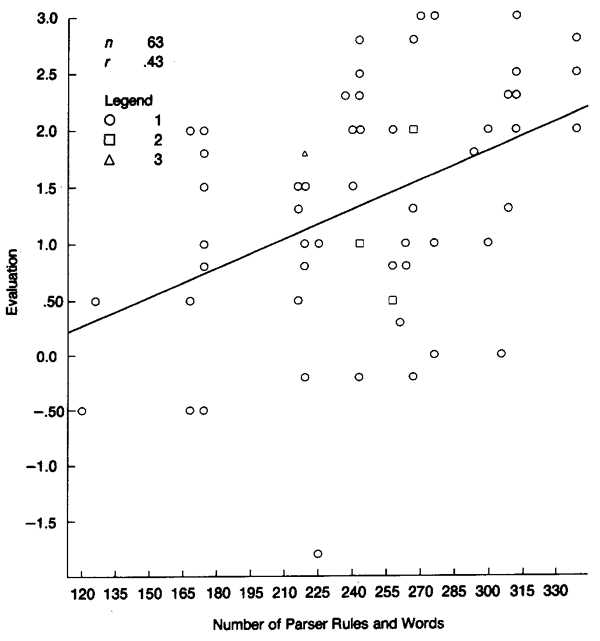

The relation between parser size and the evaluation component of the semantic differential is shown in Figure 4. The correlation coefficient is positive (0.43); subjects liked the system better as more rules were added to the parser. Although subjects were virtually neutral toward the first version of the parser (0.24), they liked the final version (2.36). Four subjects did not complete all the items in the semantic differential, lowering N to 63.

Figure 4. Relation Between Evaluation and Hit Rate


Subjects also saw later versions of the parser as more active than earlier versions (r = 0.44). The correlation between parser size and potency was very low (r = 0.16).

3.5 Experienced Users

After the interface was completed, eight experienced computer users were asked to perform the experimental task. Their data were not included in the previous figures, and their responses were not used to develop the parser.

These subjects completed the task quite quickly, with a mean time of 9 minutes and times ranging from 4 to 16 minutes. All of these subjects liked the system. Their mean evaluation score was 1.75 with a range from 0.50 to 2.75. Experienced users employed many of the same features that had been useful to novices. An interface that was derived from the commands issued by novices was easy for experienced users to learn and was well liked by them.

4. Discussion

4.1 Summary of Results

A mail interface unusable by novices evolved into one that let novices do useful work within minutes. In particular,

- the hit rate for subjects’ commands improved by an order of magnitude as the UDI software became more sophisticated,

- the final version of the UDI software was able to recognize over three-fourths of the subjects’ spontaneous commands,

- subjects were able to complete meaningful and useful work within an hour without documentation or a help facility,

- the hit rate and subjects’ evaluation of the UDI software were correlated with the number of rules in the UDI parser, and

- experienced computer users were able to learn the system easily.

The 76 percent hit rate was high enough to allow subjects to do useful work within an hour without any on-line assistance. Errors were much less frequent than in traditional systems and were easier to correct.

4.2 UDI as a Design Process

Transforming observed user behavior into software changes is one of the most labor-intensive parts of the UDI process. We did not simply add a new grammar rule for each command that a user typed in. Instead, we analyzed the logs to discover more general changes that could be made to the software. These changes could be simple or complex, but the iterative process as a whole involved considerable analysis.

For example, it became apparent very early in our testing that subjects wanted to specify numbered messages in a more flexible manner. Instead of typing “3,” they might type things like “#3” or “memo 3.” We added a new set of rules to the grammar to make message specification more robust. More rules were added later in our testing as we observed further variations of this behavior, such as “interoffice memo #3.” In the first version of the UDI parser, messages had the following syntax (these grammar descriptions are based on Ledgard’s version of BNF [14], with words in boldface type indicating terminals):

message ::= number

number ::= digit ...

digit ::= l | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 0

In the final version, the syntax for messages included many more rules:

message ::= **first** | **last** | current-noun | [**#**] number | [**interoffice**] memo-noun [**#**] number

current-noun ::= **this** | **above** | **it** | **current**

memo-noun ::= **memo** | **message** | **letter** | **msg** | **item**

number ::= digit ...

digit ::= **1** | **2** | **3** | **4** | **5** | **6** | **7** | **8** | **9** | **0**
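The final message grammar is small enough to sketch as a single regular expression. The following Python fragment is only an illustration of the rules listed above, not the UDI parser itself; the names `MESSAGE_RE` and `parse_message` and the tuple return values are assumptions made for this example, as is the choice to ignore letter case and to allow optional spaces around the # sign.

```python
import re

# Terminals taken from the final grammar above.
CURRENT_NOUNS = ("this", "above", "it", "current")
MEMO_NOUNS = ("memo", "message", "letter", "msg", "item")

current_alt = "|".join(CURRENT_NOUNS)
memo_alt = "|".join(MEMO_NOUNS)

# message ::= first | last | current-noun
#           | [#] number | [interoffice] memo-noun [#] number
MESSAGE_RE = re.compile(
    rf"""
    ^\s*(?:
        (?P<position>first|last)
      | (?P<current>{current_alt})
      | (?:(?:interoffice\s+)?(?:{memo_alt})\s*)?
        \#?\s*(?P<number>\d+)
    )\s*$
    """,
    re.IGNORECASE | re.VERBOSE,
)


def parse_message(text):
    """Return a (kind, value) tuple for a message specification, or None."""
    match = MESSAGE_RE.match(text)
    if match is None:
        return None
    if match.group("position"):
        return ("position", match.group("position").lower())
    if match.group("current"):
        return ("current", None)
    return ("number", int(match.group("number")))


if __name__ == "__main__":
    for spec in ["3", "#3", "memo 3", "interoffice memo #3", "last", "it"]:
        print(spec, "->", parse_message(spec))
```

Under these assumptions, the four numbered forms all resolve to message 3, while a bare “interoffice 3” is rejected because the grammar requires a memo-noun after “interoffice.”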

These changes did not require extensive analysis, but other problems were much more complex. The commands for sending new messages and forwarding old messages proved especially troublesome.

In the original grammar, “send” was used to create a new message and mail it to another person, whereas “forward” was used to mail a copy of a previously received message to another person. However, subjects usually used “send” to refer to both functions. For instance, our eighth subject typed “send message to Dennis” to create a new message and “send copy command to Burrows” to forward a message.

The syntax for forwarding messages also had several variations. The command “mail Whiteside letter 1 to Burrows” was intended to forward a message from Whiteside to Burrows, but “send good 16” was intended to forward message 16 to Good. Eventually we implemented a complex set of rules that could successfully interpret most of the commands to send and forward messages.
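The rules we actually implemented are not reproduced here. As a rough illustration of the kind of heuristic involved, the following Python sketch treats any command that mentions a message number as a forward and otherwise as a new message, and takes the recipient from the word after “to” when there is one. The function name `interpret_send`, the word lists, and the return format are all hypothetical. The sketch does not handle commands such as “send copy command to Burrows,” where the message is identified by its subject rather than by a number.

```python
# Hypothetical word lists for this sketch only.
SEND_VERBS = {"send", "mail", "forward"}
MEMO_NOUNS = {"memo", "message", "letter", "msg", "item", "copy"}


def interpret_send(command):
    """Guess (action, message_number, recipient) for a send-like command.

    Returns None if the command does not start with a send-like verb.
    """
    words = command.lower().split()
    if not words or words[0] not in SEND_VERBS:
        return None
    verb, rest = words[0], words[1:]

    # A message number anywhere in the command suggests forwarding.
    numbers = [int(w.lstrip("#")) for w in rest if w.lstrip("#").isdecimal()]

    if "to" in rest[:-1]:
        # Prefer the word that follows "to" as the recipient.
        recipient = rest[rest.index("to") + 1]
    else:
        # Otherwise take the first word that is not a number or a memo noun.
        names = [
            w for w in rest
            if not w.lstrip("#").isdecimal() and w not in MEMO_NOUNS and w != "to"
        ]
        recipient = names[0] if names else None

    action = "forward" if numbers or verb == "forward" else "new"
    return (action, numbers[0] if numbers else None, recipient)


if __name__ == "__main__":
    for cmd in ["send message to Dennis",
                "mail Whiteside letter 1 to Burrows",
                "send good 16"]:
        print(cmd, "->", interpret_send(cmd))
```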

The UDI method changes the role of designers. Instead of spending a lot of time in discussions and debates about how hypothetical users will expect the system to work, a UDI technique lets designers see for themselves how these users expect the system to work. Designers are then challenged to build a system that fulfills user expectations to the greatest extent possible.

Since this experiment was an extreme test of the UDI technique, our initial version of the software did not incorporate existing guidelines for user interface design. We believe that guidelines can improve the initial version of an interface substantially and thus reduce the amount of iteration required to reach a particular level of ease of use.

Iterative testing can be used very successfully on its own, without including the technique of using a hidden operator. We believe that this type of testing is essential for building easy-to-use interfaces. Testing a prototype user interface will expose bugs in the interface just as testing will reveal bugs in other parts of the software. Gould and Lewis [10] include early testing and iterative design as two of their four key principles of designing for usability.

Prototyping a user interface as early as possible allows for the most extensive testing. Major flaws in the user interface might well affect the design of the rest of the software. Unfortunately, in many cases a large part of the entire software system is written before a testable prototype is ready. Miller and Pew [17] have argued for testing representative users throughout the design cycle. For instance, the designers of Apple’s Lisa system tested several parts of the interface on computer-naive office workers, incorporating the results into a revised interface [18, 21]. Tesler [21] is convinced that early testing of prototype software is an essential part of the design process, and we agree.

4.3 Guidelines for Dialogue Design

The UDI technique is a fruitful source for testing and suggesting guidelines for user interface design. The best design guidelines do not appear out of thin air; they are based on a combination of experimentation, observation, and experience with existing systems. By observing user behavior in a situation where users had a minimum number of preconceptions about how the system should behave, UDI provides a particularly rich source of guidelines for ease of learning.

4.3.1 Synonyms

One important contributor to parser recognition of user commands was allowing synonyms for words that the parser already recognized. Users generated a variety of synonyms in their efforts to accomplish the task. Tables II and III show synonyms that were spontaneously used for the delete and retrieve functions. In general, a few words accounted for a high percentage of all verbs generated. Including the three most common synonyms was the syntactic change required most often to parse commands correctly; it was needed in one-third of all the subjects’ spontaneous commands.

| Word | Number of Times Used | Cumulative Percentage |
|---|---|---|
| delete | 96 | 67 |
| discard | 14 | 80 |
| erase | 8 | 83 |
| eliminate | 5 | 86 |
| destroy | 5 | 90 |
| remove | 4 | 92 |
| omit | 4 | 95 |
| disregard | 2 | 97 |
| – | 2 | 98 |
| drop | 1 | 99 |
| dismiss | 1 | 99 |
| unfile | 1 | 100 |
| Total | 143 | 100 |

Table II: Synonyms Generated for Delete

| Word | Number of Times Used | Cumulative Percentage |
|---|---|---|
| retrieve | 26 | 37 |
| return | 14 | 57 |
| recall | 14 | 77 |
| add | 3 | 81 |
| relocate | 2 | 84 |
| restore | 1 | 86 |
| reinstate | 1 | 87 |
| incorporate | 1 | 89 |
| show | 1 | 90 |
| cancel | 1 | 91 |
| need | 1 | 93 |
| put in | 1 | 94 |
| do not discard | 1 | 96 |
| enter | 1 | 97 |
| copy | 1 | 100 |
| send | 1 | 100 |
| Total | 70 | 100 |

Table III: Synonyms Generated for Retrieve
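The claim that a few words account for most of the verbs can be checked directly from the counts in Tables II and III. The short Python sketch below computes the share of uses covered by the k most common words; the function name `top_k_coverage` and the dictionary layout are assumptions made for this illustration, and the counts are copied from the tables.

```python
# Usage counts copied from Table II (delete) and Table III (retrieve).
DELETE_COUNTS = {
    "delete": 96, "discard": 14, "erase": 8, "eliminate": 5, "destroy": 5,
    "remove": 4, "omit": 4, "disregard": 2, "–": 2, "drop": 1,
    "dismiss": 1, "unfile": 1,
}
RETRIEVE_COUNTS = {
    "retrieve": 26, "return": 14, "recall": 14, "add": 3, "relocate": 2,
    "restore": 1, "reinstate": 1, "incorporate": 1, "show": 1, "cancel": 1,
    "need": 1, "put in": 1, "do not discard": 1, "enter": 1, "copy": 1,
    "send": 1,
}


def top_k_coverage(counts, k):
    """Percentage of all uses covered by the k most frequently used words."""
    total = sum(counts.values())
    top = sorted(counts.values(), reverse=True)[:k]
    return 100 * sum(top) / total


if __name__ == "__main__":
    for name, counts in [("delete", DELETE_COUNTS), ("retrieve", RETRIEVE_COUNTS)]:
        for k in (1, 3):
            print(f"{name}: top {k} -> {top_k_coverage(counts, k):.0f}%")
```

With these counts, recognizing the three most common words for each function covers roughly four-fifths of the verbs that subjects generated.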

The importance of synonyms was discussed by Furnas et al. [6]. They provided a series of models for estimating the likelihood of people being able to guess the names of objects under various conditions. In the domains that they studied, Furnas et al. found that the probability of any two people agreeing on the same name for a given object ranged from 0.07 to 0.18. If one of these names is recognized by a computer, then there is an 80 to 90 percent likelihood that the user will fail to guess the computer’s name for an object. This model assumes that the names chosen by the computer system designer are representative of names chosen by the user population.

The probability of guessing the correct name would increase by a factor of two if the computer recognized the name most commonly chosen by users. Adding synonyms would increase the likelihood of success almost in proportion to the number of synonyms added, for the first few synonyms.

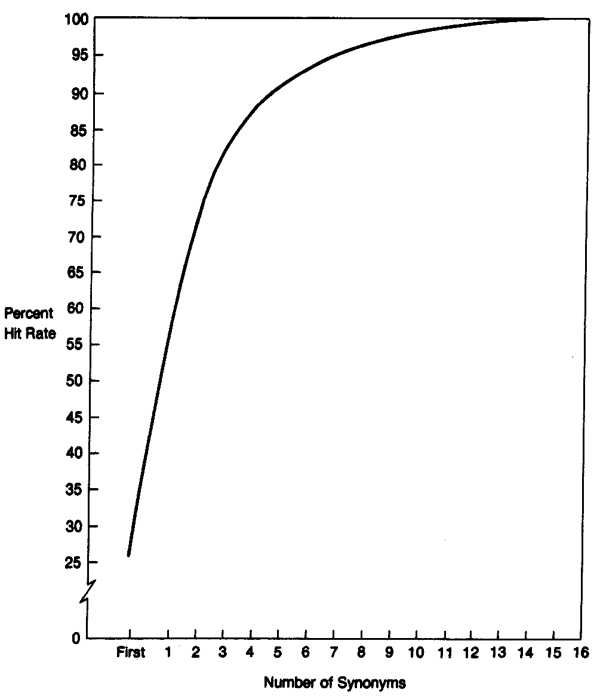

How do the predictions made by Furnas et al. match our experience? Figure 5 shows the effect of adding synonyms to the UDI software, based on the 628 spontaneous commands that used verbs. The names we initially chose for the UDI commands were based on the names used in several electronic mail systems. Choosing the most frequently used names instead of the original names would have doubled the success rate. Adding synonyms would increase the success rate still further, with the greatest increase coming with the three most common synonyms. Thus the effect of choosing names based on user behavior followed the model quite well.

Figure 5: Effect of Adding Synonyms


The model developed by Furnas et al. [6, p. 1770] would predict that the original names would be chosen by subjects about 40 percent of the time. In fact, these names were used only 26 percent of the time, suggesting that the intuitions of designers do not match the intuitions of novice computer users. For example, the most frequently used command had a name that subjects never used.

4.3.2 Flexible Description

After the addition of synonyms, the changes that had the greatest effect were those that let the subjects describe messages in a more flexible way. Subjects who wanted to see a message or a sequence of messages would refer to any of the fields in the message header, including the subject, sender, date, and message number. Sometimes more than one field would be included. Subjects would sometimes use function words like “from” and “about,” but not as frequently as we had expected.

The successful changes allow

- specifying a sequence of messages by typing the contents of any of the message’s header fields (message number, sender, date, or subject) without requiring any function words such as “from” or “about”;

- preceding message numbers with the # sign and/or the word “memo” (and its synonyms);

- specifying more than one message field, in any order, without requiring function words;

- including the word “memos” (or a synonym) in the sequence specification.

After allowing for synonyms, these were the most frequently used changes in the parser, as was shown in Table I.
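One way to picture these changes is as a matcher that compares each word of the user’s description against every header field and ignores function words and memo nouns. The Python sketch below is a minimal illustration under that assumption; it is not the UDI parser, and the names `NOISE`, `header_tokens`, and `select_messages`, as well as the sample message list, are introduced only for this example.

```python
# Words ignored in a description: function words and memo nouns (assumed list).
NOISE = {"from", "about", "memo", "memos", "message", "messages",
         "letter", "letters", "msg", "item"}

# Sample headers: (number, sender, date, subject).
MESSAGES = [
    (1, "John Whiteside", "29-Apr-81", "Transfer Command"),
    (2, "Julie Dingee", "4-Jan-82", "Staff Meetings"),
    (3, "Julie Dingee", "15-Feb-82", "Distribution List"),
    (4, "John Whiteside", "22-Feb-82", "Morale"),
    (5, "Bill Zimmer", "23-Feb-82", "Meeting"),
    (6, "Dennis Wixon", "4-Mar-82", "Tops-20 Task"),
    (7, "Julie Dingee", "4-Mar-82", "Conference Room"),
]


def header_tokens(msg):
    """Lowercased words from every header field of one message."""
    number, sender, date, subject = msg
    words = sender.split() + subject.split() + [date, str(number)]
    return {w.lower() for w in words}


def select_messages(query, messages=MESSAGES):
    """Return numbers of messages whose headers contain every query word.

    Fields may be given in any order; "#" prefixes and noise words are dropped.
    """
    tokens = [t.lstrip("#").lower() for t in query.split()]
    tokens = [t for t in tokens if t and t not in NOISE]
    return [m[0] for m in messages
            if all(t in header_tokens(m) for t in tokens)]


if __name__ == "__main__":
    for q in ["Dingee", "memos from Dingee about Staff", "#4", "4-Mar-82 Wixon"]:
        print(q, "->", select_messages(q))
```

Because a description with no remaining words matches every message, typing just “memos” lists all of the messages in this sketch.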

4.3.3 Mimicry

When faced with an unfamiliar system, people will use any clues they are given. We designed the task so that subjects would not parrot the instructions back to the machine. The presence of a key labeled “DELETE” probably contributed to the high frequency with which subjects used that word as a command to remove messages. Another form of mimicry can be made to work to the designer’s advantage: people’s mimicry of what they see on the screen.

Interestingly, this phenomenon has not been reported in the literature. However, we have observed it repeatedly and in different contexts. Perhaps the reason other investigators have not found similar phenomena is that they have either given subjects extensive tutorials or have had subjects do more restrictive tasks.

One of the most common uses of mimicry occurred when subjects saw a listing of the messages and then typed all or part of the listing for the message that they wanted to read. Allowing flexible descriptions made this work. Example 3 shows this type of mimicry.

Mail: **Memos**

| # | From | Date | Subject |
|---|---|---|---|
| 1 | John Whiteside | 29-Apr-81 | Transfer Command |
| 2 | Julie Dingee | 4-Jan-82 | Staff Meetings |
| 3 | Julie Dingee | 15-Feb-82 | Distribution List |
| 4 | John Whiteside | 22-Feb-82 | Morale |
| 5 | Bill Zimmer | 23-Feb-82 | Meeting |
| 6 | Dennis Wixon | 4-Mar-82 | Tops-20 Task |
| 7 | Julie Dingee | 4-Mar-82 | Conference Room |
| 8 | Bill Zimmer | 10-Mar-82 | Distribution |
| 9 | John Whiteside | 11-Mar-82 | Various |
| 10 | Michael Good | 12-Mar-82 | Editor People |
| 11 | Dennis Wixon | 17-Mar-82 | Keyboard Study |
| 12 | Michael Good | 18-Mar-82 | Editor People |
| 13 | Celeste Magers | 23-Mar-82 | LSI Scoring |

Mail:

Mail: **Julie Dingee 4-Jan-82 Staff Meetings**

Example 3: Mimicry

One important conclusion to be drawn from the mimicry by subjects is that system messages should be in the same basic style as the input language, assuming that the input language is reasonable in other respects. When creating the messages that get sent to users, the designer should look out for parts of the message that might appear to be reasonable input. A rule of thumb is that if you put something on the screen you should not be surprised if it comes back to you in some form as input. Testing is the best way to uncover the things that you might reasonably, but incorrectly, think that no one would ever imitate.
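This rule of thumb can be turned into an automated check: feed each line that the system displays back to the parser and see whether it is understood. The Python sketch below illustrates the idea with two toy parsers; the names `listing_line`, `strict_parse`, `lenient_parse`, and `mimicry_failures` are hypothetical, and neither parser is the one used in the experiment.

```python
# Two sample headers: (number, sender, date, subject).
MESSAGES = [
    (2, "Julie Dingee", "4-Jan-82", "Staff Meetings"),
    (5, "Bill Zimmer", "23-Feb-82", "Meeting"),
]


def listing_line(msg):
    """Format a header the way it would appear in the message listing."""
    return "{} {} {} {}".format(*msg)


def strict_parse(text):
    """Toy parser that accepts only a bare message number."""
    text = text.strip()
    return int(text) if text.isdecimal() else None


def lenient_parse(text):
    """Toy parser that accepts any fragment matching exactly one listing line."""
    needle = text.strip().lower()
    hits = [m[0] for m in MESSAGES
            if needle and needle in listing_line(m).lower()]
    return hits[0] if len(hits) == 1 else None


def mimicry_failures(parse):
    """Return displayed fragments that the parser fails to map back to their message."""
    failures = []
    for msg in MESSAGES:
        full = listing_line(msg)
        without_number = full.split(" ", 1)[1]
        for fragment in (full, without_number):
            if parse(fragment) != msg[0]:
                failures.append(fragment)
    return failures


if __name__ == "__main__":
    print("strict :", mimicry_failures(strict_parse))
    print("lenient:", mimicry_failures(lenient_parse))
```

A check of this kind only covers fragments the designer thinks to generate, so it complements testing with real users rather than replacing it.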

4.4 Limitations of this Research

Because this procedure is new and has not been tested in many different settings, several limitations should be kept in mind when evaluating this research:

1. We have studied only one application, electronic mail. The UDI procedure has also been used by Ford and Kelley to create checkbook- and calendar-management interfaces, respectively.

For UDI to work, subjects must be familiar with the problem area. People are used to dealing with mail, checkbooks, and calendars. All of these applications represent tasks that novice users can readily understand in that they all have straightforward analogies to paper-based systems. Many other office-based functions would fall in this area. In contrast, it would not be a good idea to use the UDI procedure with novice subjects in order to design a debugger, because a debugger has no parallel in the noncomputer world.

To date, UDI has been used in applications that have a relatively small domain of objects and operations. Whether UDI can be used successfully in a larger system is an open question.

The final mail interface was highly dependent on the specific task that we gave the subjects to perform. A different script might have given rise to a different interface.

2. This experiment studied ease of initial use rather than long-term usability. Many people believe that there is a trade-off between ease of learning and ease of daily use. Data from Roberts and Moran’s evaluation of nine different text editors [20] indicated exactly the opposite. There was a very high positive correlation between speed of learning by novices and speed of use by experts.

We believe that novices make the best subjects for a UDI procedure, because they are more likely to produce commands spontaneously. Experienced users are more likely to try commands that they are familiar with on their own systems. Designers can also accommodate experienced users by such measures as including appropriate jargon as synonyms. In our final testing, experienced computer users were able to perform our task in very short order. We do need to study ease of daily use, but our preliminary data give us reason for optimism.

3. Though we are claiming to have a user-derived interface, the UDI method requires an initial prototype that is not user derived. This is not a problem as long as the initial prototype is designed to minimize the number of interface features that are unchangeable. Certain fundamental parts of the interface may have to be “wired-in” in order to get a prototype running. In our experiment, the main wired-in feature was the command-line-oriented nature of the interface. It would have been very difficult to change to menu-oriented or direct-manipulation interface styles during the course of the experiment.

The UDI method is not intended to determine the functionality of a system. It is intended as a way to build an interface for a system of a given functionality. In some cases the distinction between interface and functionality may be blurred. For instance, a few UDI subjects attempted to recall messages that they had already sent (an undo function for the send command). A few other subjects expected the UDI software to keep a log of all outgoing mail, in accordance with standard secretarial practice. Both these functions make the send command easier to use by adding the ability to check for and correct mistakes, but they do not add any really new functional capabilities. Some user-oriented design techniques are being explored that may help in determining system functionality more in accordance with user needs [16, 21].

5. Conclusions

The initial results of this research are encouraging. Applied to a standard command-line mail interface, the UDI procedure gave an order-of-magnitude improvement in ease of initial use. The hit rate on the starting version was so low that, without operator intervention, novices using that software could perform almost no useful work in exchange for a full hour’s effort. By contrast, many novices finished our entire task within an hour using the final version of the mail interface. The UDI procedure demonstrates that data collected from novice subjects, when carefully analyzed and interpreted, can be used to produce an easy-to-use interface.

There are really two aspects to the UDI method: iterative design incorporating feedback from user behavior and the mechanism of intervention by a hidden operator. The full UDI technique, including the use of a hidden operator, is subject to the restrictions mentioned in the preceding section. The problem domain should be relatively small. The subjects should be familiar with the problem area but unfamiliar with computer applications to this area.

We believe that iterative design, by itself, should be universally applied to the design of user interfaces. In contrast to using the full UDI technique, a designer might build a more detailed prototype using applicable user interface design guidelines. The prototype would then be tested early in the design cycle on representative users. Testing could continue throughout the development process, although the size of the changes that can be made to the interface will diminish over time. This type of user testing serves as a debugging tool for user interfaces. Without testing, a user interface cannot be expected to work very well.

In this study we have dealt entirely with a command-line interface. There are other styles of interface that are widely believed to be inherently easier to use, such as menu interfaces or direct-manipulation interfaces. We have shown that the UDI procedure can yield large improvements in the ease of initial use of a command-line interface. Similar improvements might be possible for other interface styles by extending the UDI techniques. We believe that simply applying the latest technology does not ensure a good human interface. Only careful, iterative design can do that.

Footnotes

1. VAX, VMS, VT, RSTS, and PDP are trademarks of the Digital Equipment Corporation.

2. Examples do not distinguish between commands that were handled by the parser and commands that required operator intervention. They show what appeared on the subject’s screen. Subject’s input appears in boldface type.

Acknowledgments

The UDI project was a group effort of the Software Human Engineering group at Digital, managed by Bill Zimmer. John Whiteside made the original UDI proposal and was project leader. The software was implemented by Jim Burrows and Michael Good. Jim Burrows assembled the LSI-11-based system and made it easy for the operators to use. Dennis Wixon, Sandra Jones, and John Whiteside scored the logs. Sandra Jones was the experimenter for most of the trials, with Dennis Wixon and Holly Whiteside assisting. Dennis Wixon served as the operator for more than half of the trials, with Bob Crowling also serving frequently in this role. We thank Mary Gleeton, manager of the Digital store in Manchester, and the staff of the store for their cooperation.

References

1. Aho, A. V., and Ullman, J. D. Principles of Compiler Design. Addison-Wesley, Reading, Mass., 1977.

2. Black, J. B., and Moran, T. P. Learning and remembering command names. In Proceedings of Human Factors in Computer Systems (Gaithersburg, Md., Mar. 15-17). ACM, New York, 1982, pp. 8-11.

3. Bruder, J., Moy, M., Mueller, A., and Danielson, R. User experience and evolving design in a local electronic mail system. In Computer Message Systems, R. P. Uhlig, Ed. North-Holland, New York, 1981, pp. 69-78.

4. Chapanis, A. Man-computer research at Johns Hopkins. In Information Technology and Psychology: Prospects for the Future, R. A. Kasschau, R. Lachman, and K. R. Laughery, Eds. Praeger Publishers, New York, 1982, pp. 238-249.

5. Ford, W. R. Natural-language processing by computer — A new approach. Ph.D. dissertation. Psychology Dept., The Johns Hopkins University, Baltimore, Md., 1981 (University Microfilms Order 8115709).

6. Furnas, G. W., Landauer, T. K., Gomez, L. M., and Dumais, S. T. Statistical semantics: Analysis of the potential performance of key-word systems. Bell System Technical Journal 62, 6 (July-Aug. 1983), 1753-1806.

7. Good, M. An ease of use evaluation of an integrated editor and formatter. Tech. Rep. TR-266, Laboratory for Computer Science, M.I.T., Cambridge, Mass., Nov. 1981.

8. Goodwin, N. C. Effect of interface design on usability of message handling systems. In Proceedings of the Human Factors Society 26th Annual Meeting (Seattle, Wash., Oct. 25-29). Human Factors Society, Santa Monica, Calif., 1982, pp. 505-508.

9. Gould, J. D., Conti, J., and Hovanyecz, T. Composing letters with a simulated listening typewriter. Commun. ACM 26, 4 (Apr. 1983), 295-308.

10. Gould, J. D., and Lewis, C. Designing for usability — Key principles and what designers think. In Proceedings of the CHI ’83 Conference on Human Factors in Computing Systems (Boston, Mass., Dec. 12-15). ACM, New York, 1983, pp. 50-53.

11. Hammer, J. M., and Rouse, W. B. The human as a constrained optimal editor. IEEE Trans. Syst. Man Cybern. SMC-12, 6 (Nov./Dec. 1982), 777-784.

12. Kelley, J. F. An iterative design methodology for user-friendly natural language office information applications. ACM Trans. Off. Inf. Syst. 2, 1 (Jan. 1984), 26-41.

13. Kernighan, B. W., and Ritchie, D. M. The C Programming Language. Prentice-Hall, Englewood Cliffs, N.J., 1978.

14. Ledgard, H. F. A human engineered variant of BNF. SIGPLAN Not. 15, 10 (Oct. 1980), 57-62.

15. Mack, R. L. Understanding text editing: Evidence from predictions and descriptions given by computer-naive people. Res. Rep. RC 10333, IBM Watson Research Center, Yorktown Heights, N.Y., Jan. 18, 1984.

16. Malone, T. W. How do people organize their desks? Implications for the design of office information systems. ACM Trans. Off. Inf. Syst. 1, 1 (Jan. 1983), 99-112.

17. Miller, D. C., and Pew, R. W. Exploiting user involvement in interactive system development. In Proceedings of the Human Factors Society 25th Annual Meeting (Rochester, N.Y., Oct. 12-16). Human Factors Society, Santa Monica, Calif., 1981, pp. 401-405.

18. Morgan, C., Williams, G., and Lemmons, P. An interview with Wayne Rosing, Bruce Daniels, and Larry Tesler. Byte 8, 2 (Feb. 1983), 90-114.

19. Osgood, C. E., Suci, G. J., and Tannenbaum, P. H. The Measurement of Meaning. Univ. of Illinois Press, Urbana, Ill., 1957.

20. Roberts, T. L., and Moran, T. P. The evaluation of text editors: Methodology and empirical results. Commun. ACM 26, 4 (Apr. 1983), 265-283.

21. Tesler, L. Enlisting user help in software design. SIGCHI Bull. 14, 1 (Jan. 1983), 5-9.

22. Wagreich, B. J. Electronic mail for the hearing impaired and its potential for other disabilities. IEEE Trans. Commun. COM-30, 1 (Jan. 1982), 58-65.

23. Whiteside, J., and Wixon, D. Developmental theory as a framework for studying human-computer interaction. In Advances in Human-Computer Interaction, H. R. Hartson, Ed. Ablex Publishing, Norwood, N.J. To be published.

CR Categories and Subject Descriptors: D.2.2 [Software Engineering]: Tools and Techniques — user interfaces; H.1.2 [Models and Principles]: User/Machine Systems — human factors; H.4.3 [Information Systems Applications]: Communications Applications — electronic mail

General Terms: Experimentation, Human Factors

Additional Key Words and Phrases: user-derived interface, iterative design, command-language design, human-computer interaction

Received 6/83; revised 6/84; accepted 6/84

Copyright © 1984 by the Association for Computing Machinery, Inc. Permission to make digital or hard copies of part or all of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for components of this work owned by others than ACM must be honored. Abstracting with credit is permitted. To copy otherwise, to republish, to post on servers, or to redistribute to lists, requires prior specific permission and/or a fee. Request permissions from Publications Dept, ACM Inc., fax +1 (212) 869-0481, or permissions@acm.org.

This is a digitized copy derived from an ACM copyrighted work. ACM did not prepare this copy and does not guarantee that it is an accurate copy of the author’s original work.